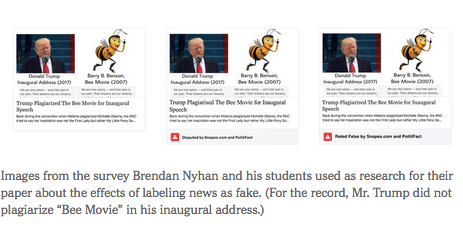

Since the 2016 presidential campaign, Facebook has taken a number of actions to prevent the continued distribution of false news articles on its platform, most notably by labeling articles rated as false or misleading by fact checkers as “disputed.”

But how effective are these measures?

To date, Facebook has offered little information to the public other than a recent email to fact checkers asserting that labeled articles receive 80 percent fewer impressions. But more data is necessary to determine the success of these efforts. Research (including my own) suggests the need to carefully evaluate the effectiveness of Facebook’s interventions.

Yale’s Gordon Pennycook and David Rand offer two principal warnings. First, as they find in a paper written with Tyrone D. Cannon, the effects of exposure to false information are not easily countered by labeling. False information we have previously encountered feels more familiar, producing a sense of fluency that causes us to rate it as more accurate than information we have not seen before. This effect persists even when Facebook-style warnings label a headline “disputed.” We should be cautious about assuming that labels tagging articles as false are enough to prevent misinformation on social media from affecting people’s beliefs.

In a second paper, Mr. Pennycook and Mr. Rand find that the presence of “disputed” labels causes study participants to rate unlabeled false stories as slightly more accurate, an “implied truth” effect. If Facebook is seen as taking responsibility for the accuracy of information in its news feed through labeling, readers could start assuming that unlabeled stories have survived scrutiny from fact checkers (an assumption that is rarely correct, since there are far too many stories for humans to check them all).